Checkpoints

- Create Compute Engine instance with the necessary API access (20 points)

- Install software (20 points)

- Ingest USGS data (20 points)

- Transform the data (20 points)

- Create bucket and store data (20 points)

Rent-a-VM to Process Earthquake Data

- GSP008

- Overview

- Setup

- Task 1. Create Compute Engine instance with the necessary API access

- Task 2. SSH into the instance

- Task 3. Install software

- Task 4. Ingest USGS data

- Task 5. Transform the data

- Task 6. Create a Cloud Storage bucket

- Task 7. Store data

- Task 8. Publish Cloud Storage files to web

- Congratulations!


Overview

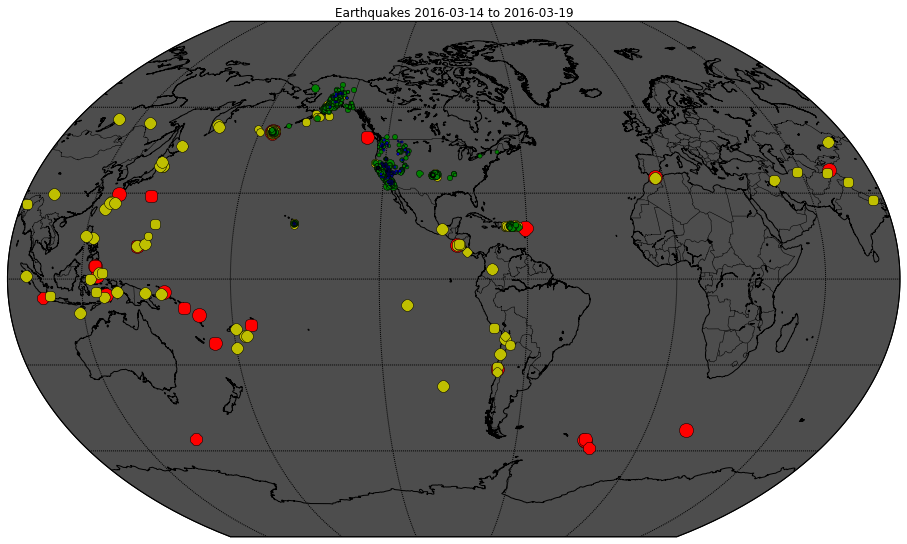

Using Google Cloud to set up a virtual machine to process earthquake data frees you from IT minutia to focus on your scientific goals. You can ingest and process data, then present the results in various formats. In this lab, you will ingest real-time earthquake data published by the United States Geological Survey (USGS) and create maps that look like the following:

In this lab you will spin up a virtual machine, access it remotely, and then manually create a pipeline to retrieve, process and publish the data.

What you will learn

In this lab, you will learn how to do the following:

- Create a Compute Engine instance with specific security permissions.

- SSH into the instance.

- Install the software package Git (for source code version control).

- Ingest data into the Compute Engine instance.

- Transform data on the Compute Engine instance.

- Store the transformed data on Cloud Storage.

- Publish Cloud Storage data to the web.

Setup

Before you click the Start Lab button

Read these instructions. Labs are timed and you cannot pause them. The timer, which starts when you click Start Lab, shows how long Google Cloud resources will be made available to you.

This hands-on lab lets you do the lab activities yourself in a real cloud environment, not in a simulation or demo environment. It does so by giving you new, temporary credentials that you use to sign in and access Google Cloud for the duration of the lab.

To complete this lab, you need:

- Access to a standard internet browser (Chrome browser recommended).

- Time to complete the lab. Remember, once you start, you cannot pause a lab.

How to start your lab and sign in to the Google Cloud console

- Click the Start Lab button. If you need to pay for the lab, a pop-up opens for you to select your payment method. On the left is the Lab Details panel with the following:

  - The Open Google Cloud console button

  - Time remaining

  - The temporary credentials that you must use for this lab

  - Other information, if needed, to step through this lab

- Click Open Google Cloud console (or right-click and select Open Link in Incognito Window if you are running the Chrome browser).

  The lab spins up resources, and then opens another tab that shows the Sign in page.

  Tip: Arrange the tabs in separate windows, side-by-side.

  Note: If you see the Choose an account dialog, click Use Another Account.

- If necessary, copy the Username below and paste it into the Sign in dialog.

  {{{user_0.username | "Username"}}}

  You can also find the Username in the Lab Details panel.

- Click Next.

- Copy the Password below and paste it into the Welcome dialog.

  {{{user_0.password | "Password"}}}

  You can also find the Password in the Lab Details panel.

- Click Next.

  Important: You must use the credentials the lab provides you. Do not use your Google Cloud account credentials.

  Note: Using your own Google Cloud account for this lab may incur extra charges.

- Click through the subsequent pages:

  - Accept the terms and conditions.

  - Do not add recovery options or two-factor authentication (because this is a temporary account).

  - Do not sign up for free trials.

After a few moments, the Google Cloud console opens in this tab.

Task 1. Create Compute Engine instance with the necessary API access

- In the Navigation menu, click Compute Engine > VM instances.

- Click Create Instance and wait for the "Create an instance" form to load.

- Use the default Region and Zone for the instance.

- In the Boot Disk section, click Change.

- Change the Version to Debian GNU/Linux 10 (buster).

- Leave the other settings as is and click Select.

- Under Identity and API access, set the access scopes for the Compute Engine default service account to Allow full access to all Cloud APIs, then click Create.

You'll see a green circle with a check when the instance is created.

Click Check my progress below to verify you're on track in this lab.
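The console steps above can also be expressed as one gcloud command. The Python sketch below only builds that command (and can optionally run it). The instance name and zone in the example are hypothetical placeholders. It assumes the debian-10 image family is still published in the debian-cloud project; Debian 10 images may no longer be available, in which case you would substitute a newer family. The cloud-platform scope is the command-line counterpart of Allow full access to all Cloud APIs.

```python
import shlex
import subprocess
from typing import List


def build_create_instance_command(name: str, zone: str) -> List[str]:
    """Return the argument list for `gcloud compute instances create`.

    Assumption: the debian-10 image family is still published in the
    debian-cloud project; substitute a newer family if it is not.
    """
    return [
        "gcloud", "compute", "instances", "create", name,
        f"--zone={zone}",
        # Boot disk image: the console's "Debian GNU/Linux 10 (buster)".
        "--image-family=debian-10",
        "--image-project=debian-cloud",
        # Command-line equivalent of "Allow full access to all Cloud APIs".
        "--scopes=cloud-platform",
    ]


def create_instance(name: str, zone: str) -> None:
    """Run the command; needs an installed and authenticated gcloud CLI."""
    subprocess.run(build_create_instance_command(name, zone), check=True)


if __name__ == "__main__":
    # Hypothetical name and zone; print the command instead of creating anything.
    print(shlex.join(build_create_instance_command("earthquake-vm", "us-central1-a")))
```

Building the argument list separately from running it makes it easy to review the exact command before any resources are created.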

Task 2. SSH into the instance

You can remotely access your Compute Engine instance using Secure Shell (SSH):

- Click the SSH button next to your newly created VM.

The VM instance details are displayed.

SSH keys are transferred automatically, so you don't need any extra software to SSH directly from the browser.

- To find some information about the Compute Engine instance, type the following into the command line:

You should see a similar output:

Task 3. Install software

- Still in the SSH window, enter the following commands:

- Enter Y when asked if it's acceptable to use additional disk space.

- Verify that Git is now installed:

You should see a similar output:

Click Check my progress below to verify you're on track in this lab.
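To confirm the installation from a script rather than by eye, you could use a minimal Python sketch like the one below. It is an illustration, not part of the lab. It looks Git up on the PATH and runs git --version, which prints a line beginning with "git version".

```python
import shutil
import subprocess
from typing import Optional


def git_version() -> Optional[str]:
    """Return the output of `git --version`, or None if git is not on PATH."""
    git = shutil.which("git")
    if git is None:
        return None
    result = subprocess.run(
        [git, "--version"], capture_output=True, text=True, check=True
    )
    return result.stdout.strip()


if __name__ == "__main__":
    version = git_version()
    print(version or "git is not installed")
```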

Task 4. Ingest USGS data

- Still in the SSH window, enter the following command to download the code from GitHub:

- Navigate to the folder corresponding to this lab:

- Examine the ingest code using less:

The less command lets you view the file: press the spacebar to scroll down, b to go back a page, and q to quit.

- Press q to exit less.

The program ingest.sh downloads a dataset of the past 7 days of earthquakes from the US Geological Survey. Notice where the file is downloaded to (disk or Cloud Storage).

- Enter the following command to run the ingest code:

Click Check my progress below to verify you're on track in this lab.
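For illustration, the ingest step can be sketched in Python with only the standard library. This sketch assumes the public USGS summary feed all_week.csv is the data source. The lab's ingest.sh may fetch a different feed or use a different download tool. Like any plain download, this sketch writes the file to the VM's local disk, not to Cloud Storage.

```python
import urllib.request
from pathlib import Path

# Assumption: the USGS summary feed of all earthquakes in the past 7 days.
USGS_WEEK_CSV = (
    "https://earthquake.usgs.gov/earthquakes/feed/v1.0/summary/all_week.csv"
)


def ingest(url: str = USGS_WEEK_CSV, dest: str = "earthquakes.csv") -> Path:
    """Download `url` to `dest` on the local disk and return the file's path."""
    path = Path(dest)
    with urllib.request.urlopen(url, timeout=60) as response:
        path.write_bytes(response.read())
    return path
```

On the VM, calling ingest() with no arguments would write earthquakes.csv to the current directory.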

Task 5. Transform the data

You will use a Python program to transform the raw data into a map of earthquake activity:

The transformation code is explained in detail in this notebook.

Feel free to read the narrative to understand what the transformation code does. The notebook itself was written in Datalab, a Google Cloud product that you will use later in this set of labs.

- Still in the Compute Engine instance, enter the following command to install the necessary Python packages on the Compute Engine instance:

- Enter the following command to run the transformation code:

- If you enter the following command, you will see a new image file, earthquakes.png, in your current directory:

Click Check my progress below to verify you're on track in this lab.
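The transformation itself lives in the lab's Python program. The code below is a simplified, standard-library-only sketch of its first half. It parses USGS-style CSV rows and assigns each event a marker color by magnitude. It assumes the USGS column names latitude, longitude, and mag. The color thresholds are illustrative choices, and the sketch leaves out drawing the actual map, which needs a plotting library.

```python
import csv
from collections import Counter
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass
class Quake:
    lat: float
    lon: float
    mag: float


def parse_quakes(lines: Iterable[str]) -> List[Quake]:
    """Parse USGS-style CSV lines, skipping events that have no magnitude."""
    quakes = []
    for row in csv.DictReader(lines):
        if not row.get("mag"):
            continue  # skip rows whose mag field is empty
        quakes.append(
            Quake(float(row["latitude"]), float(row["longitude"]), float(row["mag"]))
        )
    return quakes


def marker_color(mag: float) -> str:
    """Illustrative thresholds: green below 3, yellow below 5, red otherwise."""
    if mag < 3.0:
        return "green"
    if mag < 5.0:
        return "yellow"
    return "red"


def summarize(quakes: Iterable[Quake]) -> Dict[str, int]:
    """Count how many events fall into each marker color."""
    return dict(Counter(marker_color(q.mag) for q in quakes))
```

On the VM, you could open earthquakes.csv (with newline=""), pass the file object to parse_quakes, and call summarize on the result to see how many events fall into each color.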

Task 6. Create a Cloud Storage bucket

Return to the Cloud Console for this step.

- From the Navigation menu, select Cloud Storage.

- Click + Create, then create your bucket with the following characteristics:

- Choose a globally unique bucket name (but not a name you'd like to use for your own projects), then click Continue.

- For the location type, you can leave it as Multi-region, or improve speed and reduce costs by choosing Region (select the same region as your Compute Engine instance).

- For Choose how to control access to objects, uncheck the box for Enforce public access prevention on this bucket and select Fine-grained for Access control.

- Then, click Create.

Take note of your bucket name. You will insert its name whenever the instructions ask for <YOUR-BUCKET>.
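A bucket name must satisfy the Cloud Storage naming rules before its uniqueness even matters. The sketch below checks a simplified subset of those rules:

- allowed characters
- first and last characters
- length limits
- the reserved "goog" prefix and "google" term
- no dotted-decimal IP addresses

It is not exhaustive, and only the service itself can confirm that a name is globally unique. The function name is hypothetical.

```python
import ipaddress
import re

# Lowercase letters, digits, dashes, underscores, and dots only.
_ALLOWED = re.compile(r"[a-z0-9._-]+")


def is_plausible_bucket_name(name: str) -> bool:
    """Check a simplified subset of Cloud Storage bucket-naming rules."""
    if not _ALLOWED.fullmatch(name):
        return False
    if not (name[0].isalnum() and name[-1].isalnum()):
        return False  # must start and end with a letter or number
    max_len = 222 if "." in name else 63
    if not 3 <= len(name) <= max_len:
        return False
    if any(len(part) > 63 for part in name.split(".")):
        return False  # each dot-separated component is at most 63 characters
    if name.startswith("goog") or "google" in name:
        return False  # reserved prefix and term
    try:
        ipaddress.IPv4Address(name)
        return False  # dotted-decimal IP addresses are not allowed
    except ValueError:
        return True
```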

Task 7. Store data

You will now learn how to store the original and transformed data in Cloud Storage.

- In the SSH window of the Compute Engine instance, run the following command, replacing <YOUR-BUCKET> with the bucket name you created earlier:

This command copies the files to your bucket in Cloud Storage.

- Return to the Cloud Console and, on the Storage Browser page, click the Refresh button near the top of the page. Then click the bucket name, followed by the /earthquakes folder.

You should now see the following three files in the earthquakes folder:

- earthquakes.csv

- earthquakes.htm

- earthquakes.png

Click Check my progress below to verify you're on track in this lab.
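The copy step can also be done from Python. The sketch below uses two hypothetical functions:

- plan_uploads maps each local earthquakes.* file to an object under the earthquakes/ prefix, mirroring the folder you just browsed.
- upload sends those files with the google-cloud-storage client library.

The sketch assumes that library is installed (pip install google-cloud-storage) and that the client picks up the VM's service account credentials.

```python
import glob
import os
from typing import Dict


def plan_uploads(pattern: str = "earthquakes.*",
                 prefix: str = "earthquakes/") -> Dict[str, str]:
    """Map each local file matching `pattern` to an object name under `prefix`."""
    return {path: prefix + os.path.basename(path) for path in sorted(glob.glob(pattern))}


def upload(bucket_name: str, plan: Dict[str, str]) -> None:
    """Upload each planned file to the bucket.

    Assumes google-cloud-storage is installed and credentials come from the
    VM's service account (the full-access scope chosen in Task 1).
    """
    # Imported here so plan_uploads works even without the library installed.
    from google.cloud import storage

    bucket = storage.Client().bucket(bucket_name)
    for local_path, object_name in plan.items():
        bucket.blob(object_name).upload_from_filename(local_path)
```

Separating the plan from the upload lets you inspect which objects would be written before touching the bucket.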

Task 8. Publish Cloud Storage files to web

You will now publish the files in your bucket to the web.

-

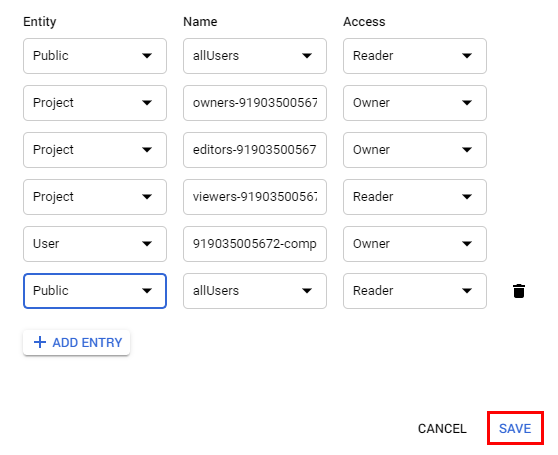

To create a publicly accessible URL for the files, click the three dots at the end of the

earthquakes.htmfile and select Edit access from the dropdown menu. -

In the overlay that appears, click the + Add entry button.

-

Add a permission for all users by entering in the following:

- Select Public for the Entity.

- Enter allUsers for the Name.

- Select Reader for the Access.

- Then click Save.

- Repeat the above steps for earthquakes.png.

- Click the name of a file and notice the URL of the published Cloud Storage file and how it relates to your bucket name and content. It should resemble the following:

- If you click the earthquakes.png image file and then its public URL, a new tab opens with the following image loaded:

- Go ahead and close the SSH window.
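The public URL you saw in this task follows a predictable pattern built from the bucket name and the object name. The sketch below builds that URL, assuming the storage.googleapis.com endpoint for objects shared with allUsers. The function name is hypothetical.

```python
from urllib.parse import quote


def public_url(bucket: str, object_name: str) -> str:
    """Return the public URL for an object that allUsers can read."""
    # Percent-encode the object name but keep '/' so folder-like prefixes stay readable.
    return f"https://storage.googleapis.com/{bucket}/{quote(object_name, safe='/')}"
```

For example, public_url("<YOUR-BUCKET>", "earthquakes/earthquakes.htm") yields the address of the published HTML page.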

Congratulations!

You have completed this lab and learned how to spin up a Compute Engine instance, access it remotely, and then manually create a pipeline to retrieve, process, and publish the data.

Finish Your Quest

This self-paced lab is part of the Scientific Data Processing quest. A quest is a series of related labs that form a learning path. Completing this quest earns you a badge to recognize your achievement. You can make your badge or badges public and link to them in your online resume or social media account. Enroll in this quest or any quest that contains this lab and get immediate completion credit. See the Google Cloud Skills Boost catalog to see all available quests.

Take Your Next Lab

Continue your Quest with Weather Data in BigQuery, or try Distributed Image Processing in Cloud Dataproc.

Next steps / Learn more

Here are some follow-up steps:

- Check out USGS.gov for complete information. For example:

- The latest 20 large earthquakes in the world

- Geodetic data

- Hazard assessment data and models, and more.

- Sign up for automatic notifications of earthquakes in your area.

Google Cloud training and certification

...helps you make the most of Google Cloud technologies. Our classes include technical skills and best practices to help you get up to speed quickly and continue your learning journey. We offer fundamental to advanced level training, with on-demand, live, and virtual options to suit your busy schedule. Certifications help you validate and prove your skill and expertise in Google Cloud technologies.

Manual Last Updated October 16, 2023

Lab Last Tested October 19, 2023

Copyright 2024 Google LLC All rights reserved. Google and the Google logo are trademarks of Google LLC. All other company and product names may be trademarks of the respective companies with which they are associated.